|

|

|

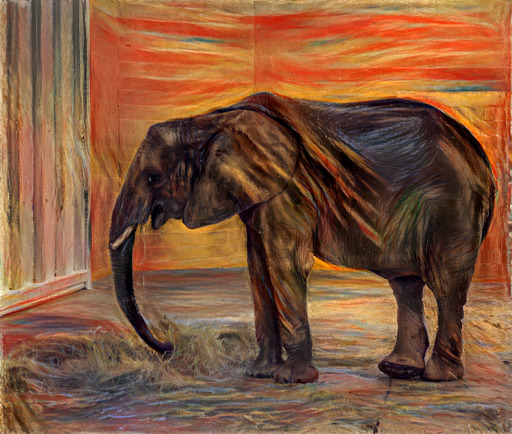

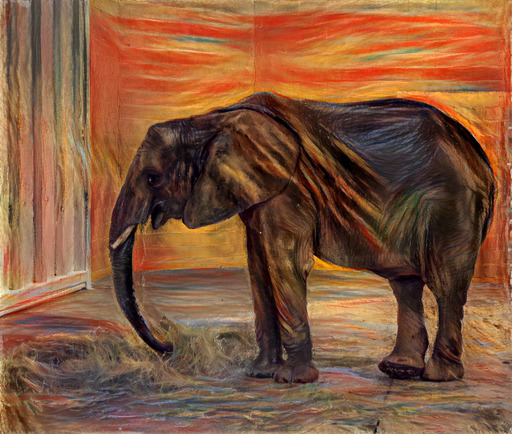

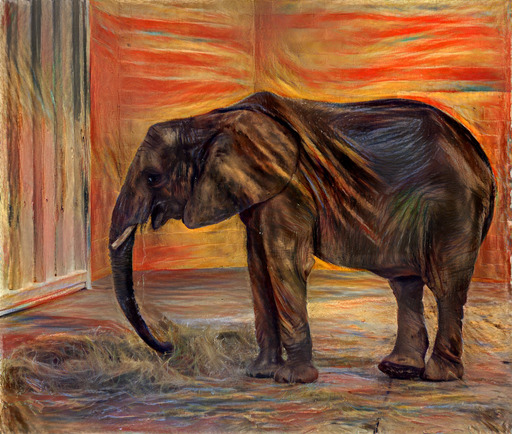

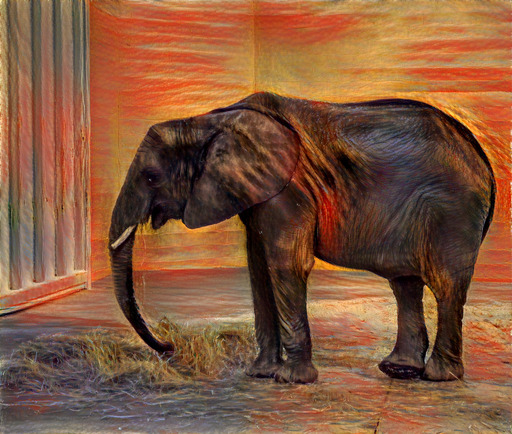

| 32-bit float (no quantization) | 8-bit | 7-bit |

|

|

|

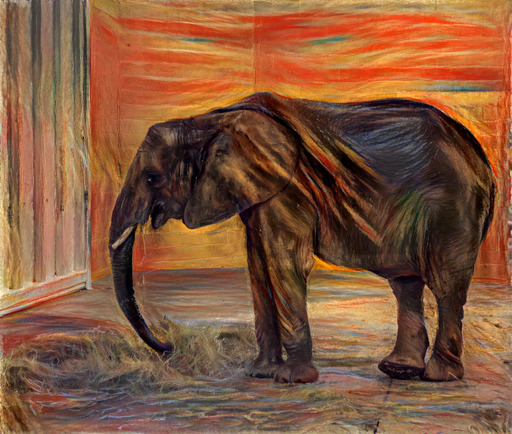

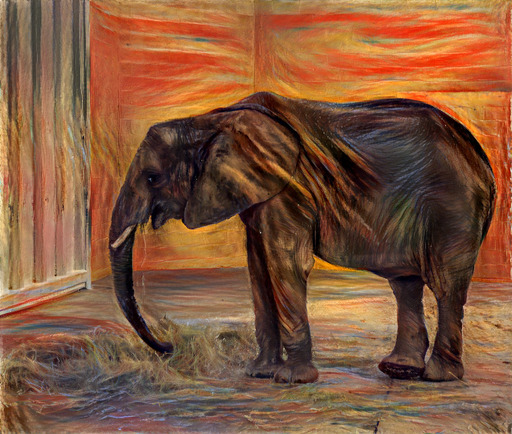

| 6-bit | 5-bit | 4-bit |

|

|

|

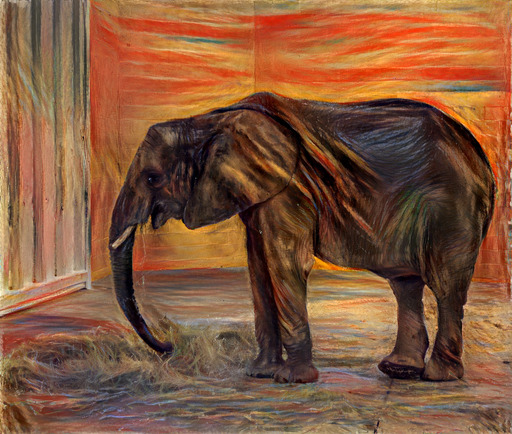

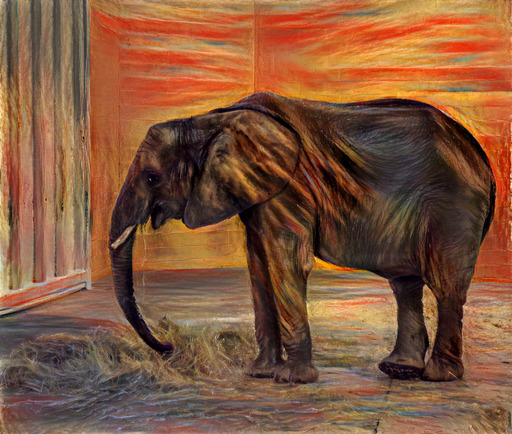

| 3-bit | 2-bit | 1-bit |

I recently implemented pastiche—discussed in a prior post—for applying neural style transfer. I encountered a size limit when uploading the library to PyPI, as a package cannot exceed 60MB. The 32-bit floating point weights for the underlying VGG model [1] were contained in an 80MB file. My package was subsequently approved for a size limit increase that could accommodate the VGG weights as-is, but I was still interested in compressing the model.

Various techniques have been proposed for compressing neural networks—including distillation [2] and quantization [3,4]—which have been shown to work well in the context of classification. My problem was in the context of style transfer, so I was not sure how model compression would impact the results.

Experiments

I decided to experiment with weight quantization, using a scheme where I could store the quantized weights on disk, and then uncompress the weights to full 32-bit floats at runtime. This quantization scheme would allow me to continue using my existing code after the model is loaded. I am not targeting environments where memory is a constraint, so I was not particularly interested in approaches that would also reduce the model footprint at runtime. I used kmeans1d—discussed in a prior post—for quantizing each layer’s weights.

Before I implemented support for loading a quantized VGG model, I first ran experiments to see how different levels of compression would impact style transfer. I did not conduct extensive experiments—just a few style transfers at different levels of compression. quantize.py creates updated VGG models with simulated quantization, and quantized_pastiche.sh runs style transfer using the updated VGG models. These scripts are in a separate branch I created for the experiments.

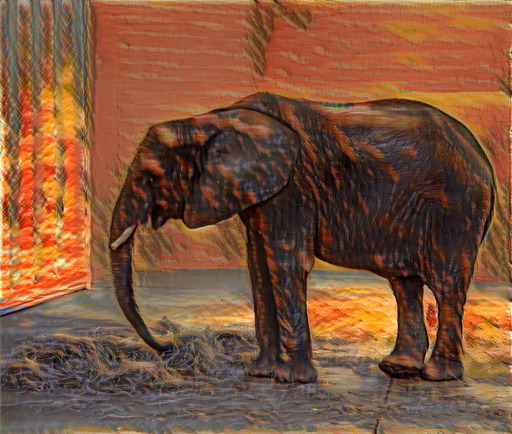

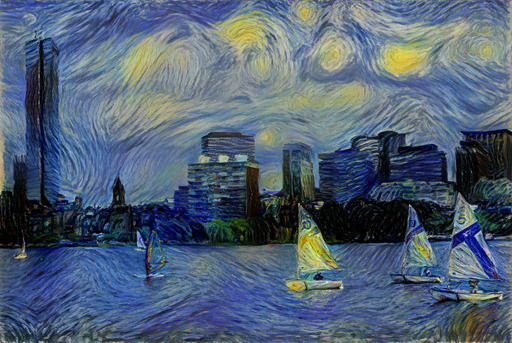

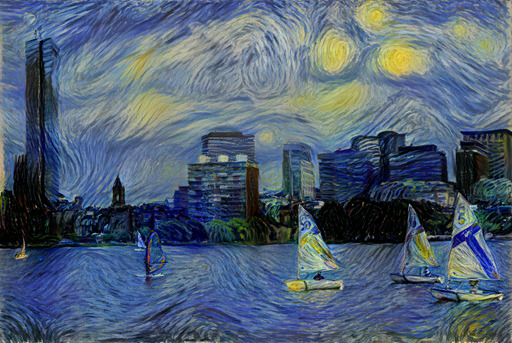

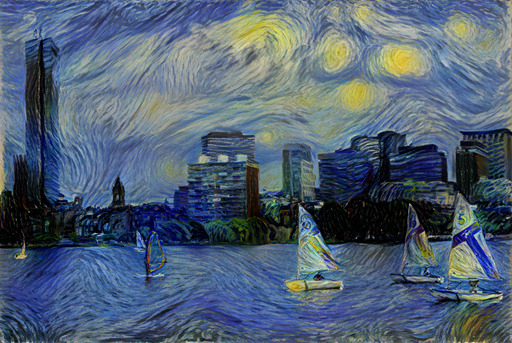

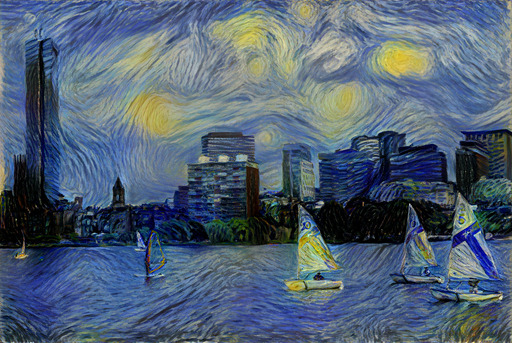

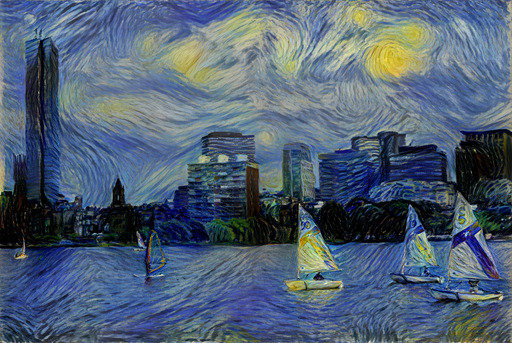

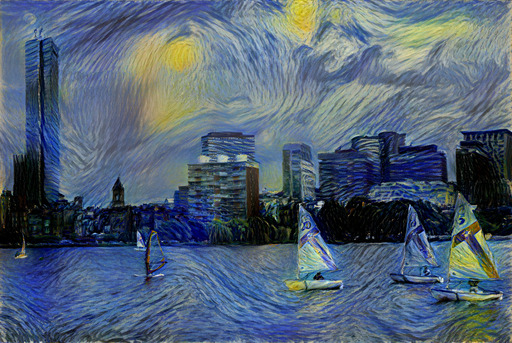

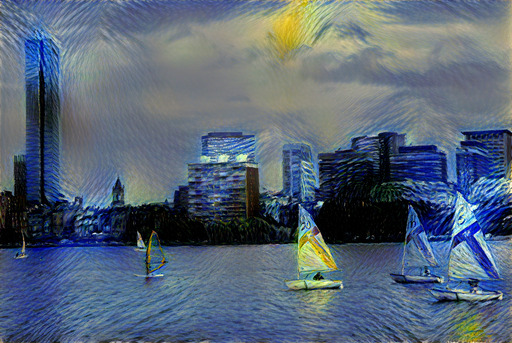

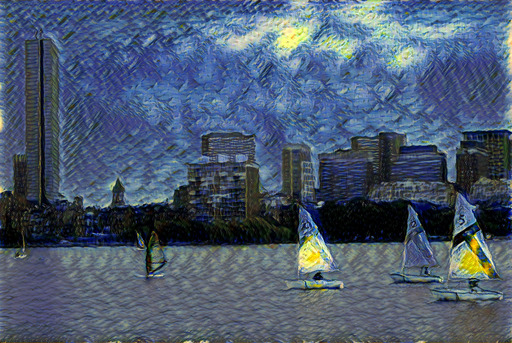

The images at the top of this post were generated with Edvard Munch’s The Scream and a photo I took at the Pittsburgh Zoo in 2017. The images below were generated with Vincent van Gogh’s The Starry Night and a photo I took in Boston in 2015. The image captions indicate the compression rate of the VGG model used for the corresponding style transfer.

|

|

|

| 32-bit float (no quantization) | 8-bit | 7-bit |

|

|

|

| 6-bit | 5-bit | 4-bit |

|

|

|

| 3-bit | 2-bit | 1-bit |

Implementation

I originally decided to compress the model using 6-bit weights, and ran a few additional style transfers to check the quality at this compression level. I modified the code to generate and load VGG models with weights quantized to arbitrary bit widths. Unfortunately, my implementation had a noticeable effect on latency when loading the model, taking almost twenty seconds for a model with weights compressed to 2 bits (I didn’t test for other compression rates, but larger bit widths would presumably take longer).

I subsequently decided to quantize the weights to 8 bits instead of 6 bits, since this allowed for fast processing using PyTorch’s built-in uint8 type. The VGG file size decreased from 80MB to 20MB, well within the 60MB PyPI limit that I originally encountered. Loading the quantized model takes less than 1 second.

References

[1] Simonyan, Karen, and Andrew Zisserman. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” ArXiv:1409.1556 [Cs], September 4, 2014. http://arxiv.org/abs/1409.1556.

[2] Hinton, Geoffrey, Oriol Vinyals, and Jeff Dean. “Distilling the Knowledge in a Neural Network.” ArXiv:1503.02531 [Cs, Stat], March 9, 2015. http://arxiv.org/abs/1503.02531.

[3] Vanhoucke, Vincent, Andrew Senior, and Mark Z. Mao. “Improving the Speed of Neural Networks on CPUs.” In Deep Learning and Unsupervised Feature Learning Workshop, NIPS 2011.

[4] Han, Song, Huizi Mao, and William J. Dally. “Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding.” ArXiv:1510.00149 [Cs], October 1, 2015. http://arxiv.org/abs/1510.00149.